No rush at all (I am about to head home for the day)! I appreciate all the time you have spent helping.

My current goal is the same one I started with. It's been a fun puzzle.


I am just looking to set up distributed monitoring. Master communicates with Satellites, which communicate with Agents. Currently this is just a simple POC setup. The Master server, Satellite server, Windows agent, Linux agent, and a Meraki switch are the only devices involved. The Windows agent, Linux agent, and switch should only ever be checked by the Satellite. The Satellite reports back to the Master, and the Master displays the dashboard. The checks being performed are just ping (hostalive), ssh (check-ssh), http (check-http), and winrm (check-winrm).
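For what it's worth, that goal may not need command_endpoint at all. Here is a minimal sketch, assuming the agentless hosts are defined in the satellite's zone directory on the master and the satellite accepts config. The host name, address, and vars.os value are made up, and the ITL commands hostalive, ssh, and http stand in for the custom check-* commands mentioned above.

```
// [assumed path] zones.d/WDC/hosts.conf on the master.
// Objects in this directory belong to zone "WDC", so the satellite
// executes their checks; no Icinga agent is needed on the target.
object Host "linux-host-01" {        // hypothetical host name
  check_command = "hostalive"        // ping-based check from the ITL
  address = "192.0.2.21"             // placeholder address
  vars.os = "Linux"
}

// Network-level service checks, also run from the satellite.
apply Service "ssh" {
  check_command = "ssh"
  assign where host.vars.os == "Linux"
}

apply Service "http" {
  check_command = "http"
  assign where host.vars.os == "Linux"
}
```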

Have a wonderful day!

-Adam

Yes, the reason:

The Icinga 2 hierarchy consists of so-called zone objects. Zones depend on a parent-child relationship in order to trust each other.

found here: Distributed Monitoring - Icinga 2
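As a rough illustration of that parent-child relationship, a zones.conf for this thread's setup might look like the sketch below. The endpoint names and the zone name WDC come from the thread; the zone name "master" and the address are assumptions.

```
// Sketch of zones.conf; "master" as a zone name and the address are assumed.
object Endpoint "AWS-NOC-02" {
}

object Endpoint "WDC-NOCSat-02" {
  host = "192.0.2.10"                // placeholder address of the satellite
}

object Zone "master" {
  endpoints = [ "AWS-NOC-02" ]
}

// The satellite zone names the master zone as its parent,
// which is what establishes the trust relationship.
object Zone "WDC" {
  endpoints = [ "WDC-NOCSat-02" ]
  parent = "master"
}
```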

I am not seeing any way to get command_endpoint = host.vars.agent_endpoint into the configuration with Director UI. I can’t change it manually because Director will just change it back.

Ah yes, true, that might be a Director limitation. It wants to set it to host.name, which would work too; that is what you should see when you set run on agent = yes.

I do not see anything weird in your new config at first glance. It is a much better setup.

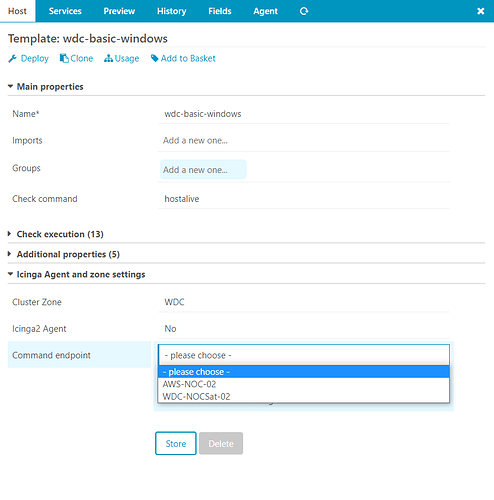

When selecting a command_endpoint I don’t have the ability to manually enter in a string like that unfortunately. It feels weird for Director to have that limitation.

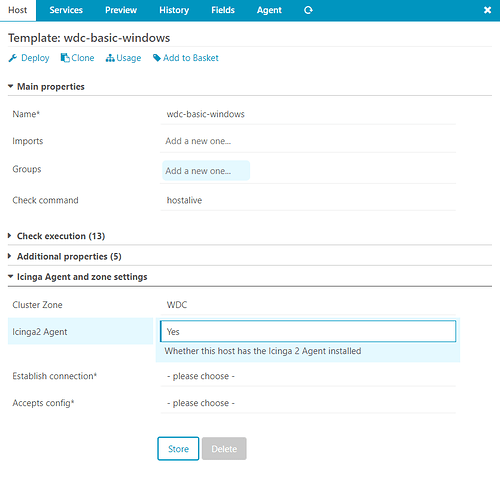

However, I can of course select run on agent = yes for all of the templates. This gets rid of the command_endpoint option.

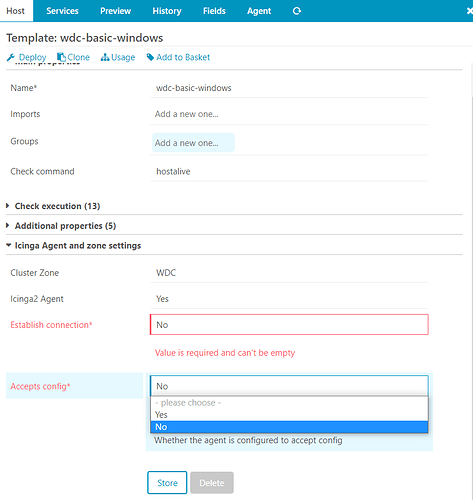

However, this also forces me to select a value for establish connection and accepts config. I am selecting no here because these agents do not have Icinga installed. This goes back to the goal of not having to install Icinga on every host I want to monitor. I just need the satellites to do ping/http/winrm/ssh checks.

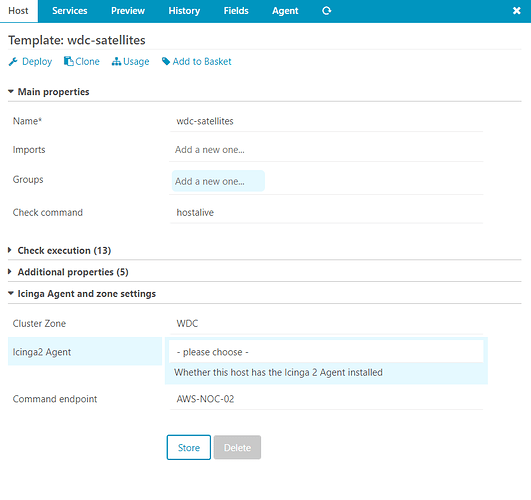

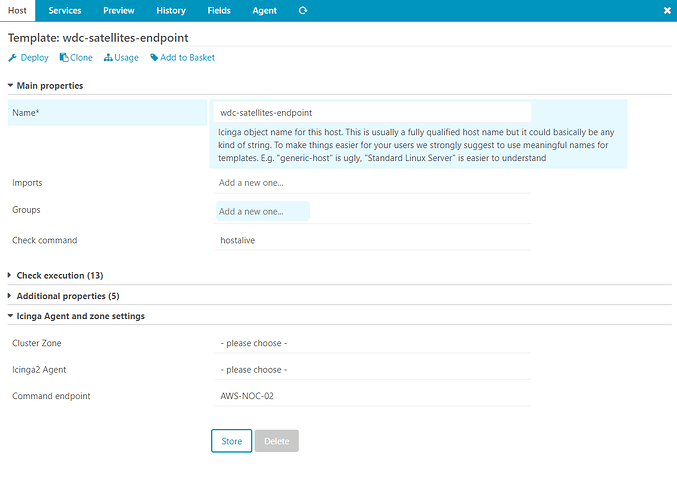

Now for the host template that is applied to the satellite host, if I tell it that the icinga agent is installed (which it is since it is a satellite), I cannot tell it that its command endpoint is master.

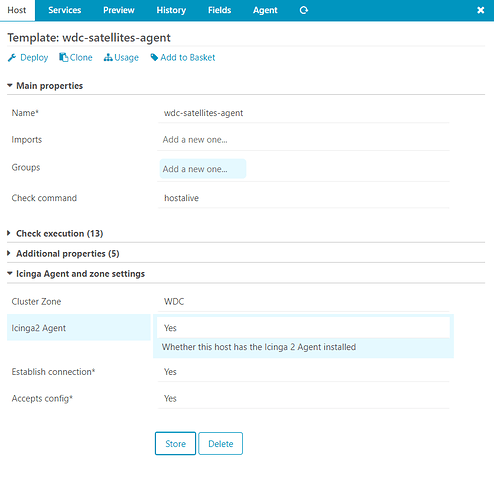

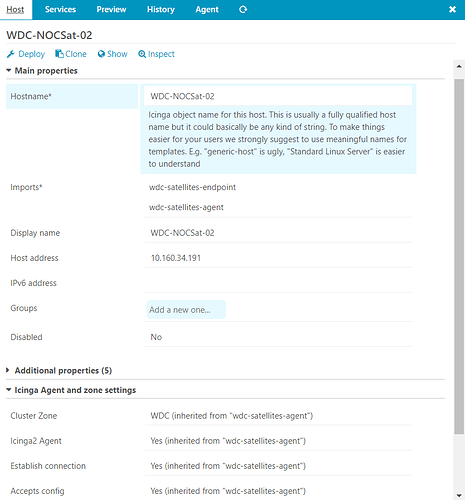

I can however add multiple templates to a host so let me try that. I will use the above template to tell the satellite that it is in the WDC zone and its command endpoint is AWS-NOC-02 (master). Then I will use this 2nd template to tell icinga that the satellite has an agent installed and accept configuration.

Let’s see if this will deploy. Nope.

    information/cli: Icinga application loader (version: r2.8.1-1)
    information/cli: Loading configuration file(s).
    information/ConfigItem: Committing config item(s).
    information/ApiListener: My API identity: AWS-NOC-02
    critical/config: Error: Validation failed for object 'WDC-NOCSat-02' of type 'Host'; Attribute 'command_endpoint': Command endpoint must be in zone 'WDC' or in a direct child zone thereof.
    Location: in [stage]/zones.d/WDC/hosts.conf: 22:1-22:27
    [stage]/zones.d/WDC/hosts.conf(20): }
    [stage]/zones.d/WDC/hosts.conf(21):
    [stage]/zones.d/WDC/hosts.conf(22): object Host "WDC-NOCSat-02" {
                                        ^^^^^^^^^^^^^^^^^^^^^^^^^^^
    [stage]/zones.d/WDC/hosts.conf(23): import "wdc-satellites-endpoint"
    [stage]/zones.d/WDC/hosts.conf(24): import "wdc-satellites-agent"
    critical/config: 1 error

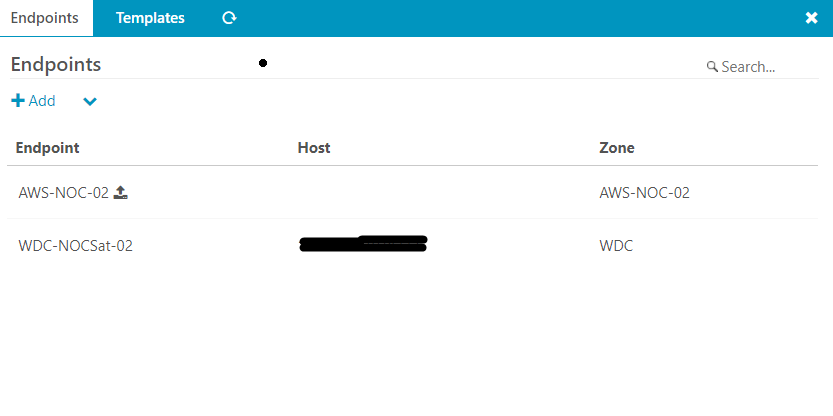

So the command_endpoint, which in this case is AWS-NOC-02, needs to be in the WDC zone. But there is no way it can be in that zone because it is the master and in its own zone. If we look at the endpoints we can see that it is not in the WDC Zone.
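The rule in that error message can be illustrated with a small sketch. It assumes the layout from this thread (zone WDC as a child of the master's zone); the address and check command are placeholders, not the actual deployed config.

```
// [assumed path] zones.d/WDC/hosts.conf

// Rejected by validation: AWS-NOC-02 belongs to the parent zone, not to
// WDC or a child zone of WDC.
// object Host "WDC-NOCSat-02" {
//   command_endpoint = "AWS-NOC-02"
// }

// Passes the same rule: endpoint WDC-NOCSat-02 is a member of zone WDC.
object Host "WDC-NOCSat-02" {
  check_command = "hostalive"
  address = "192.0.2.10"             // placeholder address
  command_endpoint = "WDC-NOCSat-02"
}
```

In other words, a command endpoint can only point sideways within the object's own zone or downward to a direct child zone, never upward to the parent.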

Let's go ahead and revert the template back to what it used to be and then redeploy so that we can see the other changes.

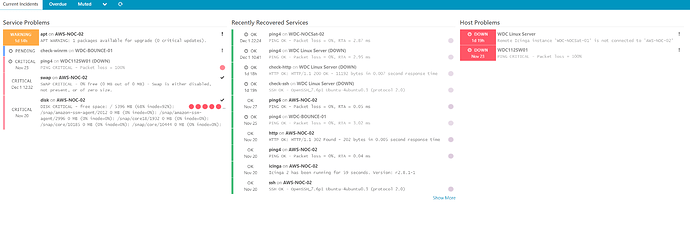

Unfortunately, despite these changes, the dashboard appears to be the same.

I wonder what I am missing. I will have to continue to tinker with this.

Hi @hewithaname

this:

    critical/config: Error: Validation failed for object 'WDC-NOCSat-02' of type 'Host'; Attribute 'command_endpoint': Command endpoint must be in zone 'WDC' or in a direct child zone thereof.
    Location: in [stage]/zones.d/WDC/hosts.conf: 22:1-22:27
    [stage]/zones.d/WDC/hosts.conf(20): }
    [stage]/zones.d/WDC/hosts.conf(21):
    [stage]/zones.d/WDC/hosts.conf(22): object Host "WDC-NOCSat-02" {

is a sneaky problem that I ran into last week.

No idea how to fix it in Director, but I configure this:

    object Host "l" {
      import "satelite-host"

      // Host Details
      icon_image = "tux.png"
      display_name = ""
      address = ""

      // Assign variables
      vars.os = "Linux"
      vars.type = "Satelite"
      vars.env = "PROD"
      vars.agent_endpoint = name
      vars.purpose = "Icinga_Satelite"
      vars.zone = "AW-US"

      // Place the satellite's own host object in the master zone
      zone = "master"
    }

where zone = "master" solves that sticky problem.
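One possible reading of why this works: with zone = "master", the satellite's host object belongs to the master zone, so the master schedules its checks, and any command_endpoint that points at the satellite sits in a direct child zone, which the validation rule allows. Here is a minimal sketch using names from this thread; the address and the "disk" service are assumptions for illustration, and Director may not generate config in this form.

```
// Satellite host object owned by the master zone.
object Host "WDC-NOCSat-02" {
  check_command = "hostalive"        // executed by the master itself
  address = "192.0.2.10"             // placeholder address
  zone = "master"
  vars.agent_endpoint = name         // resolves to "WDC-NOCSat-02"
}

// Local checks on the satellite: the endpoint is in zone "WDC",
// a direct child of "master", so validation passes.
apply Service "disk" {
  check_command = "disk"
  command_endpoint = host.vars.agent_endpoint
  assign where host.vars.agent_endpoint
}
```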


Very interesting! That’s good information to have. I think the next best steps are to do some manual configuration of the conf files to establish a proper setup between the master and satellites and then use director kickstart to import them as objects. That would bypass a lot of the issues we are seeing here.
