Cluster

The cluster this is happening in:

- It consists of 2 HA masters and 2 satellites.

- The masters use Icinga DB, with icingadb-redis, as their backend.

- The data is stored in a 3-node Galera cluster.

- There are about 20,000 hosts and 55,000 services in the cluster.

- A small subset of those hosts are updated passively: a passive check result is only submitted when the checked host state doesn’t match the current host state reported by the Icinga 2 API.

Problem

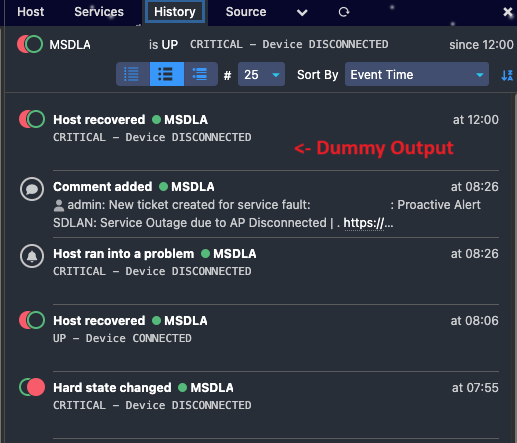

The Icinga 2 API reports one state and check time, while the database contains a different state and check time.

Only a small percentage of check results (~400 hosts) appear to be affected. We only noticed because some of the hosts update their state passively, and the passive update is only triggered if the checked state differs from the API state.
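For context, the gating in our passive update works roughly like the sketch below. This is a minimal sketch, not our actual script: `should_submit` and `build_check_result` are hypothetical names, and the payload assumes the Icinga 2 API's `/v1/actions/process-check-result` action with a `filter_vars` host filter. It shows why a stale DB row can persist: if the API already agrees with the checked state, nothing is posted.

```python
def should_submit(checked_state: int, api_state: int) -> bool:
    """Hypothetical gate: only post a passive result when the freshly
    checked state differs from what the Icinga 2 API currently reports."""
    return checked_state != api_state


def build_check_result(host_name: str, exit_status: int, output: str) -> dict:
    """Hypothetical helper building a JSON body for
    POST /v1/actions/process-check-result (Icinga 2 API, port 5665)."""
    return {
        "type": "Host",
        # filter_vars avoids having to quote the host name inside the filter
        "filter": "host.name == name",
        "filter_vars": {"name": host_name},
        "exit_status": exit_status,
        "plugin_output": output,
    }


# The API already says UP (0) and the check also returns UP, so nothing is
# sent, even if the database still shows the host as pending (99).
print(should_submit(checked_state=0, api_state=0))  # False
print(build_check_result("hidden_hostname1", 0, "PING OK"))
```

The design choice is deliberate (it keeps API traffic low), but it means the API, not the database, is treated as the source of truth.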

Here is an example of what we are seeing. The API data comes from an Icinga 2 API request (port 5665) and the DB data comes from an SQL query.

In this example the API has a check result, but the DB reports that a check has never been run.

hidden_hostname1: api = 0 @ 2025/02/24 21:04:18 | 2025/02/24 21:04:18; db = 99 @ Invalid timestamp | 1970/01/01 00:00:00

{'api': {'display_name': 'hidden_hostname1', 'last_check': 1740431058.718439, 'last_state': 1, 'last_state_change': 1740431058.718439, 'state': 0}, 'db': {'name': 'hidden_hostname1', 'hard_state': 99, 'soft_state': 99, 'check_attempt': 1, 'last_update': None, 'last_state_change': 0}}
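To compare the two sides, the DB row has to be normalised first. As far as I can tell, `last_update` and `last_state_change` in Icinga DB's `host_state` table are millisecond Unix timestamps (the API uses float seconds), and a state of 99 is what Icinga DB stores for pending, i.e. no check result yet. A minimal sketch applied to the first example; `normalise_db_row` and `ms_to_datetime` are hypothetical helpers:

```python
from datetime import datetime, timezone
from typing import Optional

PENDING = 99  # assumption: Icinga DB's state value for "no check result yet"


def ms_to_datetime(ms: Optional[int]) -> Optional[datetime]:
    """Convert an Icinga DB millisecond timestamp to a UTC datetime.
    None and 0 are both treated as 'never'."""
    if not ms:
        return None
    return datetime.fromtimestamp(ms / 1000, tz=timezone.utc)


def normalise_db_row(db: dict) -> dict:
    """Hypothetical helper: flag pending rows and convert timestamps."""
    return {
        "pending": db["hard_state"] == PENDING and db["soft_state"] == PENDING,
        "last_update": ms_to_datetime(db["last_update"]),
        "last_state_change": ms_to_datetime(db["last_state_change"]),
    }


db = {'name': 'hidden_hostname1', 'hard_state': 99, 'soft_state': 99,
      'check_attempt': 1, 'last_update': None, 'last_state_change': 0}
api_last_check = datetime.fromtimestamp(1740431058.718439, tz=timezone.utc)

row = normalise_db_row(db)
print(row["pending"], row["last_update"])  # True None
print(api_last_check.strftime("%Y/%m/%d %H:%M:%S"))  # 2025/02/24 21:04:18
```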

Here is another example, where the DB has more recent data than the API:

hidden_hostname2: api = 1 @ 2024/12/12 04:52:04 | 2024/12/12 04:52:04; db = 0 @ 2024/12/12 04:57:04 | 2024/12/12 04:57:04

{'api': {'display_name': 'hidden_hostname2', 'last_check': 1733979124.328236, 'last_state': 0, 'last_state_change': 1733979124.328236, 'state': 1}, 'db': {'name': 'hidden_hostname2', 'hard_state': 0, 'soft_state': 0, 'check_attempt': 1, 'last_update': 1733979424496, 'last_state_change': 1733979424496}}
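Working out the gap for this example means putting both timestamps in the same unit (DB milliseconds divided by 1000). A small sketch; `lag_seconds` and `describe` are hypothetical helpers, and the 1-second tolerance is an arbitrary choice:

```python
def lag_seconds(api_last_check: float, db_last_update_ms: int) -> float:
    """Positive: the DB is newer than the API. Negative: the API is newer."""
    return db_last_update_ms / 1000 - api_last_check


def describe(lag: float, tolerance: float = 1.0) -> str:
    """Classify the direction of the mismatch (tolerance is arbitrary)."""
    if abs(lag) <= tolerance:
        return "in sync"
    return "db ahead of api" if lag > 0 else "api ahead of db"


lag = lag_seconds(1733979124.328236, 1733979424496)
print(round(lag, 3))  # 300.168
print(describe(lag))  # db ahead of api
```

So for hidden_hostname2 the DB is about five minutes ahead of the API.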

Looking at when these problems occurred, the majority happened at the same point in time, suggesting a single problem affected many hosts at once.

However, about half a dozen other conflicting results all occurred at different times from each other.
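Grouping the mismatches per minute is a simple way to separate the one large event from the scattered ones. A minimal sketch: `bucket_by_minute` is a hypothetical helper, and using the API's `last_check` as "when it happened" is an assumption (the DB's `last_update / 1000` would work the same way):

```python
from collections import Counter
from datetime import datetime, timezone
from typing import Iterable


def bucket_by_minute(timestamps: Iterable[float]) -> Counter:
    """Count mismatch timestamps (Unix seconds) per UTC minute."""
    counts: Counter = Counter()
    for ts in timestamps:
        minute = datetime.fromtimestamp(ts, tz=timezone.utc).replace(
            second=0, microsecond=0)
        counts[minute] += 1
    return counts


# Timestamps from the two examples above; in practice you would feed in
# the timestamp of every mismatching host.
mismatches = [1740431058.718439, 1733979124.328236]
for minute, n in bucket_by_minute(mismatches).most_common():
    print(minute.strftime("%Y/%m/%d %H:%M"), n)
```

A single minute with hundreds of entries points to one event; the half dozen outliers show up as buckets of 1.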

Things I’ve tried

I haven’t been able to find a known issue, nor have I found this problem in other clusters we look after (yet; active checking masks the problem by producing new, good check results).

I’ve tried clearing the Redis cache and restarting Redis, Icinga DB and Icinga 2 on both head ends (one after the other). This made the hosts display the data from the database again (even where that data was wrong), which triggered the passive check to post a new check result, but new bad results appeared after that point.

I’ve checked the cluster for consistency and run the SQL query on all hosts in the cluster, and got the same result on each.
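Confirming that every node returns the same rows for every host (not just the sampled ones) can be automated along these lines. Minimal sketch: `diverging_hosts` and the node names are hypothetical, and fetching the rows from each database node (e.g. with a MySQL client library) is left out:

```python
from typing import Dict, List, Optional, Tuple

# (hard_state, soft_state, last_update_ms) as returned by the SQL query
Row = Tuple[int, int, Optional[int]]


def diverging_hosts(results: Dict[str, Dict[str, Row]]) -> List[str]:
    """Given {node: {host_name: row}}, return hosts whose rows are not
    identical on every node, including hosts missing from some nodes."""
    all_hosts = set()
    for rows in results.values():
        all_hosts.update(rows)
    diverging = []
    for host in sorted(all_hosts):
        seen = {rows.get(host) for rows in results.values()}
        if len(seen) > 1:
            diverging.append(host)
    return diverging


results = {
    "db-node-1": {"hidden_hostname2": (0, 0, 1733979424496)},
    "db-node-2": {"hidden_hostname2": (0, 0, 1733979424496)},
    "db-node-3": {"hidden_hostname2": (0, 0, 1733979424496)},
}
print(diverging_hosts(results))  # []
```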

At this point I’m not even sure whether Redis, Icinga DB or the database is causing the issue, though I’m leaning towards Redis or Icinga DB simply because the API is sometimes behind the database.

Versions

icinga2 version

```
icinga2 - The Icinga 2 network monitoring daemon (version: r2.14.3-1)

Copyright (c) 2012-2025 Icinga GmbH (https://icinga.com/)
License GPLv2+: GNU GPL version 2 or later <https://gnu.org/licenses/gpl2.html>
This is free software: you are free to change and redistribute it.
There is NO WARRANTY, to the extent permitted by law.

System information:
Platform: Debian GNU/Linux
Platform version: 12 (bookworm)
Kernel: Linux
Kernel version: 6.1.0-21-amd64
Architecture: x86_64

Build information:
Compiler: GNU 12.2.0
Build host: runner-hh8q3bz2-project-575-concurrent-0
OpenSSL version: OpenSSL 3.0.15 3 Sep 2024

Application information:

General paths:
Config directory: /etc/icinga2
Data directory: /var/lib/icinga2
Log directory: /var/log/icinga2
Cache directory: /var/cache/icinga2
Spool directory: /var/spool/icinga2
Run directory: /run/icinga2

Old paths (deprecated):
Installation root: /usr
Sysconf directory: /etc
Run directory (base): /run
Local state directory: /var

Internal paths:
Package data directory: /usr/share/icinga2
State path: /var/lib/icinga2/icinga2.state
Modified attributes path: /var/lib/icinga2/modified-attributes.conf
Objects path: /var/cache/icinga2/icinga2.debug
Vars path: /var/cache/icinga2/icinga2.vars
PID path: /run/icinga2/icinga2.pid
```

icingadb version

```
Icinga DB version: v1.2.0

Build information:
Go version: go1.22.2 (linux, amd64)
Git commit: a0a65af0260b9821e4d72692b9c8fda545b6aeca

System information:
Platform: Debian GNU/Linux
Platform version: 12 (bookworm)
```

icingadb-redis version

```
Redis server v=7.2.6 sha=4e7416a9:0 malloc=jemalloc-5.3.0 bits=64 build=8aaa39c6119e2eaf
```