icinga2 --version output:

icinga2 - The Icinga 2 network monitoring daemon (version: r2.11.0-1)

Copyright (c) 2012-2019 Icinga GmbH (https://icinga.com/)

License GPLv2+: GNU GPL version 2 or later http://gnu.org/licenses/gpl2.html

This is free software: you are free to change and redistribute it.

There is NO WARRANTY, to the extent permitted by law.

System information:

Platform: Raspbian GNU/Linux

Platform version: 10 (buster)

Kernel: Linux

Kernel version: 4.19.66-v7+

Architecture: armv7l

Build information:

Compiler: GNU 8.3.0

Build host: runner-LTrJQZ9N-project-297-concurrent-0

Application information:

General paths:

Config directory: /etc/icinga2

Data directory: /var/lib/icinga2

Log directory: /var/log/icinga2

Cache directory: /var/cache/icinga2

Spool directory: /var/spool/icinga2

Run directory: /run/icinga2

Old paths (deprecated):

Installation root: /usr

Sysconf directory: /etc

Run directory (base): /run

Local state directory: /var

Internal paths:

Package data directory: /usr/share/icinga2

State path: /var/lib/icinga2/icinga2.state

Modified attributes path: /var/lib/icinga2/modified-attributes.conf

Objects path: /var/cache/icinga2/icinga2.debug

Vars path: /var/cache/icinga2/icinga2.vars

PID path: /run/icinga2/icinga2.pid

This is what the master shows at the time the agent connects. Other than this, the master shows no traces of the agent trying to connect:

[2019-10-24 18:41:29 +0100] information/ApiListener: New client connection from [x.x.x.x]:45492 (no client certificate)

[2019-10-24 18:41:29 +0100] information/HttpServerConnection: Request: POST /v1/actions/process-check-result (from [x.x.x.x]:45492), user: root, agent: curl/7.64.0).

[2019-10-24 18:41:29 +0100] information/HttpServerConnection: HTTP client disconnected (from [x.x.x.x]:45492)

[2019-10-24 18:41:35 +0100] information/ApiListener: New client connection from [x.x.x.x]:45508 (no client certificate)

[2019-10-24 18:41:35 +0100] information/HttpServerConnection: Request: POST /v1/actions/process-check-result (from [x.x.x.x]:45508), user: root, agent: curl/7.64.0).

[2019-10-24 18:41:35 +0100] information/HttpServerConnection: HTTP client disconnected (from [x.x.x.x]:45508)

[2019-10-24 18:41:38 +0100] information/ApiListener: New client connection for identity ‘agent02’ from [x.x.x.x]:43924

[2019-10-24 18:41:39 +0100] information/ApiListener: Sending config updates for endpoint ‘agent02’ in zone ‘agent02’.

[2019-10-24 18:41:39 +0100] information/ApiListener: Syncing configuration files for global zone ‘global-templates’ to endpoint ‘agent02’.

[2019-10-24 18:41:39 +0100] information/ApiListener: Finished sending config file updates for endpoint ‘agent02’ in zone ‘agent02’.

[2019-10-24 18:41:39 +0100] information/ApiListener: Syncing runtime objects to endpoint ‘agent02’.

[2019-10-24 18:41:39 +0100] information/ApiListener: Finished syncing runtime objects to endpoint ‘agent02’.

[2019-10-24 18:41:39 +0100] information/ApiListener: Finished sending runtime config updates for endpoint ‘agent02’ in zone ‘agent02’.

[2019-10-24 18:41:39 +0100] information/ApiListener: Sending replay log for endpoint ‘agent02’ in zone ‘agent02’.

[2019-10-24 18:41:39 +0100] information/ApiListener: Finished sending replay log for endpoint ‘agent02’ in zone ‘agent02’.

[2019-10-24 18:41:39 +0100] information/ApiListener: Finished syncing endpoint ‘agent02’ in zone ‘agent02’.

[2019-10-24 18:41:39 +0100] information/JsonRpcConnection: Received certificate request for CN ‘agent02’ signed by our CA.

In short:

The agent thinks it is connected, but there are no traces of the agent on the master. Then the agent suddenly shows a disconnect (when the handshake fails?) and tries to reconnect to the master. The master receives the request and cluster health is restored.

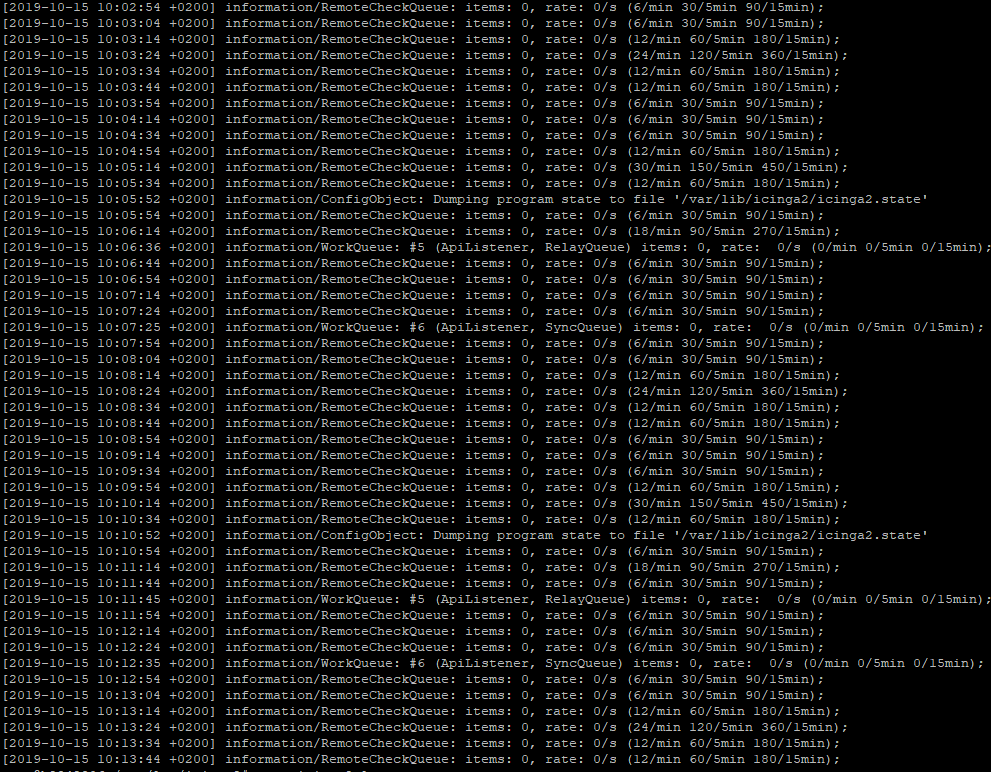

Looking at the agent’s log history again, it seems to repeat this sequence whenever it is not ‘really’ connected:

[2019-10-24 17:22:52 +0200] information/ApiListener: New client connection for identity ‘icinga’ to [x.x.x.x]:5665

[2019-10-24 17:22:52 +0200] information/ApiListener: Requesting new certificate for this Icinga instance from endpoint ‘icinga’.

[2019-10-24 17:22:52 +0200] information/ApiListener: Sending config updates for endpoint ‘icinga’ in zone ‘master’.

[2019-10-24 17:22:52 +0200] information/ApiListener: Finished sending config file updates for endpoint ‘icinga’ in zone ‘master’.

[2019-10-24 17:22:52 +0200] information/ApiListener: Syncing runtime objects to endpoint ‘icinga’.

[2019-10-24 17:22:52 +0200] information/ApiListener: Finished syncing runtime objects to endpoint ‘icinga’.

[2019-10-24 17:22:52 +0200] information/ApiListener: Finished sending runtime config updates for endpoint ‘icinga’ in zone ‘master’.

[2019-10-24 17:22:52 +0200] information/ApiListener: Sending replay log for endpoint ‘icinga’ in zone ‘master’.

[2019-10-24 17:22:52 +0200] information/ApiListener: Finished sending replay log for endpoint ‘icinga’ in zone ‘master’.

[2019-10-24 17:22:52 +0200] information/ApiListener: Finished syncing endpoint ‘icinga’ in zone ‘master’.

[2019-10-24 17:22:52 +0200] information/ApiListener: Finished reconnecting to endpoint ‘icinga’ via host ‘x.x.x.x’ and port ‘5665’

[2019-10-24 17:23:14 +0200] information/ConfigObject: Dumping program state to file ‘/var/lib/icinga2/icinga2.state’

[2019-10-24 17:23:15 +0200] information/WorkQueue: #6 (ApiListener, SyncQueue) items: 0, rate: 0/s (0/min 0/5min 0/15min);

[2019-10-24 17:23:15 +0200] information/WorkQueue: #5 (ApiListener, RelayQueue) items: 0, rate: 0/s (0/min 0/5min 0/15min);

[2019-10-24 17:23:52 +0200] information/JsonRpcConnection: No messages for identity ‘icinga’ have been received in the last 60 seconds.

[2019-10-24 17:23:52 +0200] warning/JsonRpcConnection: API client disconnected for identity ‘icinga’

[2019-10-24 17:23:52 +0200] warning/ApiListener: Removing API client for endpoint ‘icinga’. 0 API clients left.

[2019-10-24 17:24:01 +0200] information/ApiListener: Reconnecting to endpoint ‘icinga’ via host ‘x.x.x.x’ and port ‘5665’

[2019-10-24 17:26:12 +0200] critical/ApiListener: Cannot connect to host ‘x.x.x.x’ on port ‘5665’: Connection timed out

[2019-10-24 17:26:21 +0200] information/ApiListener: Reconnecting to endpoint ‘icinga’ via host ‘x.x.x.x’ and port ‘5665’

[2019-10-24 17:28:14 +0200] information/ConfigObject: Dumping program state to file ‘/var/lib/icinga2/icinga2.state’

[2019-10-24 17:28:25 +0200] information/WorkQueue: #6 (ApiListener, SyncQueue) items: 0, rate: 0/s (0/min 0/5min 0/15min);

[2019-10-24 17:28:25 +0200] information/WorkQueue: #5 (ApiListener, RelayQueue) items: 0, rate: 0/s (0/min 0/5min 0/15min);

[2019-10-24 17:28:31 +0200] critical/ApiListener: Cannot connect to host ‘x.x.x.x’ on port ‘5665’: Connection timed out

[2019-10-24 17:28:41 +0200] information/ApiListener: Reconnecting to endpoint ‘icinga’ via host ‘x.x.x.x’ and port ‘5665’

[2019-10-24 17:33:14 +0200] information/ConfigObject: Dumping program state to file ‘/var/lib/icinga2/icinga2.state’

[2019-10-24 17:33:35 +0200] information/WorkQueue: #5 (ApiListener, RelayQueue) items: 0, rate: 0/s (0/min 0/5min 0/15min);

[2019-10-24 17:33:35 +0200] information/WorkQueue: #6 (ApiListener, SyncQueue) items: 0, rate: 0/s (0/min 0/5min 0/15min);
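To make the timing in the agent log above easier to see, here is a small Python sketch that measures how long each reconnect attempt takes before it ends in "Connection timed out". The function names and the `SAMPLE` list are only for illustration; the log lines are copied from the excerpt above, and the matching relies on nothing more than the two message prefixes visible there.

```python
import re
from datetime import datetime

# Matches the "[YYYY-MM-DD HH:MM:SS +ZZZZ] message" layout seen in the log excerpt.
TIMESTAMP = re.compile(r"^\[(\d{4}-\d{2}-\d{2} \d{2}:\d{2}:\d{2} [+-]\d{4})\]\s+(.*)$")


def parse(line):
    """Return (timestamp, message) for a log line, or None if it does not match."""
    match = TIMESTAMP.match(line.strip())
    if match is None:
        return None
    when = datetime.strptime(match.group(1), "%Y-%m-%d %H:%M:%S %z")
    return when, match.group(2)


def connect_timeouts(lines):
    """Seconds between each 'Reconnecting to endpoint' and the following 'Cannot connect'."""
    started = None
    durations = []
    for line in lines:
        parsed = parse(line)
        if parsed is None:
            continue
        when, message = parsed
        if "Reconnecting to endpoint" in message:
            started = when
        elif "Cannot connect to host" in message and started is not None:
            durations.append((when - started).total_seconds())
            started = None
    return durations


# Lines copied from the agent log above.
SAMPLE = [
    "[2019-10-24 17:24:01 +0200] information/ApiListener: Reconnecting to endpoint ‘icinga’ via host ‘x.x.x.x’ and port ‘5665’",
    "[2019-10-24 17:26:12 +0200] critical/ApiListener: Cannot connect to host ‘x.x.x.x’ on port ‘5665’: Connection timed out",
    "[2019-10-24 17:26:21 +0200] information/ApiListener: Reconnecting to endpoint ‘icinga’ via host ‘x.x.x.x’ and port ‘5665’",
    "[2019-10-24 17:28:31 +0200] critical/ApiListener: Cannot connect to host ‘x.x.x.x’ on port ‘5665’: Connection timed out",
]

print(connect_timeouts(SAMPLE))  # [131.0, 130.0]
```

Both attempts give up after a little over two minutes, so the agent is not being refused; its connection attempts simply get no answer at all.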

Is it possible that ‘ghost’ connections on a router are causing this problem?
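One way to narrow this down from the agent side, independent of Icinga, would be a plain TCP probe against the master's port 5665. The sketch below is only an assumption about how such a test could look: `probe` is a made-up helper name, and the host has to be filled in with the real master address. A quick "connection refused" would point at the port being closed, while a probe that runs into the timeout would suggest that packets are being dropped silently somewhere on the path.

```python
import socket
import time


def probe(host, port, timeout=5.0):
    """Try one TCP connect; return (ok, seconds_elapsed, error_text_or_None)."""
    start = time.monotonic()
    try:
        # create_connection raises OSError (including timeouts) on failure.
        with socket.create_connection((host, port), timeout=timeout):
            return True, time.monotonic() - start, None
    except OSError as exc:
        return False, time.monotonic() - start, str(exc)
```

Calling `probe("x.x.x.x", 5665)` (with the real master address in place of `x.x.x.x`) at the moment the agent log shows "Reconnecting to endpoint" would show whether a fresh connection from the same machine gets through while Icinga's own attempt times out.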