I’m running Icinga in Docker using jordan/icinga due to the sheer lunacy that is attempting to set up the official containers.

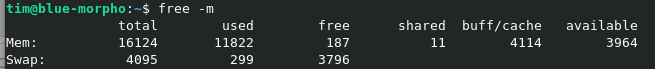

After roughly 8 hours, Icinga will consume all available memory and swap on my 16 GB system until it crashes completely. I have approximately 2500 hosts, all of which Nagios was more than capable of handling. We haven’t configured any services yet due to the, you know, ridiculous memory leak that renders the entire product unusable.

I’ve also noticed that it spins up an absurd number (HUNDREDS) of zombie processes.

We were working towards moving away from Nagios, but it’s looking like we won’t be doing that until this bug is fixed.

Hello,

Can you please provide more factual information (Icinga version, system, setup, ps output, crash dumps, etc.)?

If you think it is a memory leak, can you pinpoint the GitHub issue you think it’s related to?

Right now I admit I’m sceptical: I run a 40k-device Icinga architecture across a dozen pollers with quite complex configurations, and I haven’t seen a serious memory leak in 3 years. Overall it’s running fine.

Also, you can mitigate this kind of problem and its impact by limiting the container’s maximum memory size in Docker.
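As a rough illustration of the memory cap suggested above, here is a minimal Python sketch that builds a `docker update` command with the `--memory` and `--memory-swap` flags. The container name `icinga` and the `8g` value are placeholders, not taken from this thread, and the helper names are made up for the example. A cap like this only confines the damage to the container; it does not address whatever is consuming the memory.

```python
import subprocess


def memory_limit_command(container, memory, allow_swap=False):
    """Build a `docker update` command that caps a container's memory.

    `container` and `memory` (e.g. "8g") are caller-supplied; nothing here
    is specific to any particular Icinga image.
    """
    cmd = ["docker", "update", "--memory", memory]
    if not allow_swap:
        # --memory-swap is the combined memory+swap limit, so setting it
        # equal to --memory leaves the container no swap to grow into.
        cmd += ["--memory-swap", memory]
    cmd.append(container)
    return cmd


def apply_memory_limit(container, memory):
    """Run the command against a live Docker daemon (not called on import)."""
    subprocess.run(memory_limit_command(container, memory), check=True)


# Hypothetical usage, assuming a running container named "icinga":
# apply_memory_limit("icinga", "8g")
```

The same two flags can be passed to `docker run` when the container is first created.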

Sorry for the delay. I wanted to give a detailed response and that required waiting for the RAM to fill.

Currently running version 2.12.0 of Icinga inside Docker 19.03.8 on top of Ubuntu Server 20.04 running in a Xen host with 16 GB of memory and 8 CPUs.

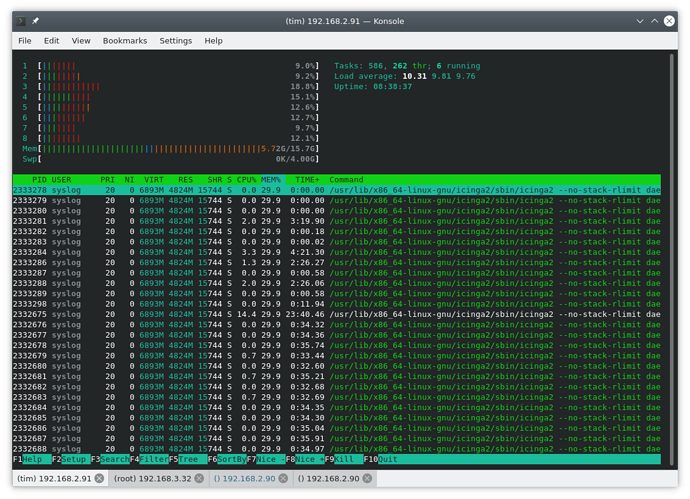

This is what htop shows after about 2 hours of having Icinga running:

And here’s the memory use the next day:

The only additional information I can give regarding the issue is that it only started once I made a sync job to import my host configs from a converted Nagios hosts.conf, but that may be because the system was mostly empty before then.

Another thing I noticed was that it’s spinning up over 400 ping processes that never seem to go away, and they all seem to be pinging IP addresses that correspond to downed hosts. The real memory hog is the Icinga2 process, which shows up about 15-20 times in htop but only 4 in ps.
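To put numbers on the zombie and ping process counts described above, a small Python sketch along these lines could tally processes by command name from `ps` output. It assumes a procps-style `ps` where defunct processes carry a `Z` state and a ` <defunct>` suffix; the function names are invented for this example.

```python
import subprocess
from collections import Counter


def parse_ps(ps_output):
    """Yield (state_letter, command) pairs from `ps -e -o stat= -o comm=` output."""
    for line in ps_output.splitlines():
        parts = line.split(None, 1)
        if len(parts) != 2:
            continue  # skip blank or malformed lines
        stat, comm = parts
        # procps appends " <defunct>" to the command name of zombie processes
        yield stat[0], comm.replace("<defunct>", "").strip()


def zombie_counts(ps_output):
    """Count zombie (state Z) processes per command name."""
    counts = Counter(comm for state, comm in parse_ps(ps_output) if state == "Z")
    return dict(counts)


def command_counts(ps_output):
    """Count all processes per command name, whatever their state."""
    return dict(Counter(comm for _, comm in parse_ps(ps_output)))


def snapshot():
    """Take a live snapshot; separate -o options avoid header-parsing ambiguity."""
    result = subprocess.run(
        ["ps", "-e", "-o", "stat=", "-o", "comm="],
        capture_output=True, text=True, check=True,
    )
    return result.stdout


# Hypothetical usage inside the container:
# print(zombie_counts(snapshot()))
# print(command_counts(snapshot()).get("ping", 0))
```

Running it a few times an hour apart would show whether the ping and zombie counts keep growing or level off.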

I unfortunately didn’t grab the logs from when MySQL crashed, but if you give me about 3 hours I’m sure it’ll come around again.

Thanks for the reply, that gives me a bit of context to start with.

The following thing bothers me:

I won’t jump to conclusions yet, but it looks like a configuration problem to me. Can you please provide the following things:

- output of

icinga2 daemon -C -x debugcommand run on your master - a host config example you tried to sync

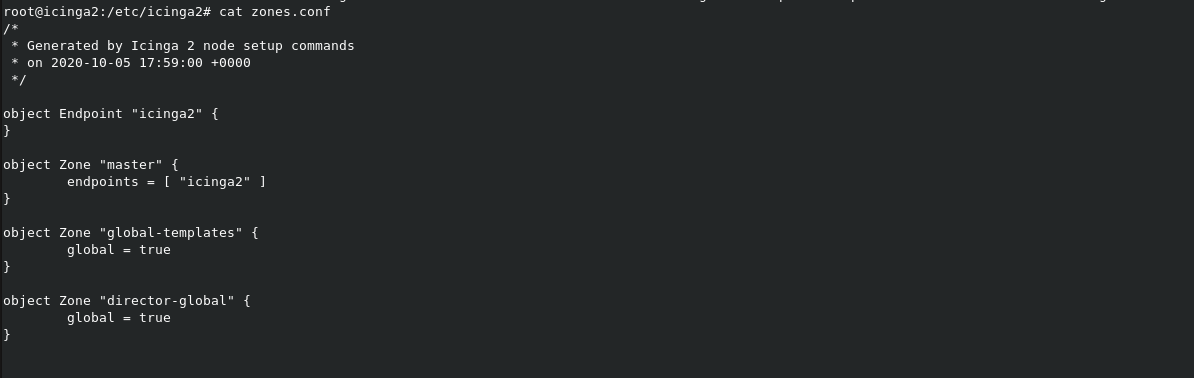

/etc/icinga2/zones.conffile on your master

By the way, how did you try to sync your converted Nagios hosts.conf config?

For this part, I don’t know which executables or CheckCommands you are using, but make sure Icinga kills them after some time. For example:

    object CheckCommand "something" {
      command = [ PluginDir + "/check_something" ]
      arguments = {
        "-H" = "$address$"
      }
      // Icinga terminates the plugin if it runs longer than this
      timeout = 30s
    }

This is all I got from icinga2 daemon -C -x

The host config was converted to JSON using an in-house script, so I’m definitely not discounting the possibility that it’s the culprit somehow. I’m not sure how to show you a copy without giving out customer info, though, so I’ll have to get back to you with a redacted version. Either way, I tried to sync it using Fileshipper.

Here are the contents of zones.conf:

That output is expected; I was asking for

icinga2 daemon -C -x debug

No problem with that, you can give me an example with anonymized domain and machine names replaced by placeholders (you can mass-edit them with sed if you feel lazy). The point is to see how the hosts are declared and which resources they use (templates, CheckCommands, etc.), which I’ll ask about too.
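For the anonymization step, a sketch like the following is one way to swap real names for placeholders while keeping the structure of the config readable. It is written in Python rather than sed so that the same host always maps to the same placeholder. The domain `customer.com` and the placeholder formats are assumptions for illustration; the actual format of the in-house JSON is not known here.

```python
import re
from itertools import count

# Loose IPv4 matcher: four dot-separated groups of 1-3 digits.
IPV4 = re.compile(r"\b(?:\d{1,3}\.){3}\d{1,3}\b")


def anonymize(text, domains):
    """Replace IPv4 addresses and host names under `domains` with placeholders.

    Each distinct value gets its own stable placeholder, so relationships
    between hosts (parents, templates, repeated addresses) stay visible.
    """
    mapping = {}
    counters = {"ip": count(1), "host": count(1)}

    def placeholder(kind, value):
        if value not in mapping:
            n = next(counters[kind])
            mapping[value] = f"ip-{n}" if kind == "ip" else f"host-{n}.example.invalid"
        return mapping[value]

    text = IPV4.sub(lambda m: placeholder("ip", m.group(0)), text)
    for domain in domains:
        # One or more labels followed by the (escaped) customer domain.
        pattern = re.compile(
            r"\b[\w-]+(?:\.[\w-]+)*\." + re.escape(domain) + r"\b",
            re.IGNORECASE,
        )
        text = pattern.sub(lambda m: placeholder("host", m.group(0).lower()), text)
    return text


# Hypothetical usage on an exported host file:
# with open("hosts.json") as f:
#     print(anonymize(f.read(), ["customer.com"]))
```

Anything not covered by the two patterns (display names, contact addresses, custom variables) would still need a manual look before posting.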

If you are using Fileshipper, you are also using Director, right? Which version?

From what I understand of your zones.conf, you have everything on a single Icinga instance? No distributed polling across different zones?

Unfortunately… no.