Hello everyone,

I have created a test installation, with one master and two clients. I have configured both clients (should act as agents) on the master.

The goal is to make Icinga 2 reachable from the internet, so that clients the master cannot reach directly can connect to it.

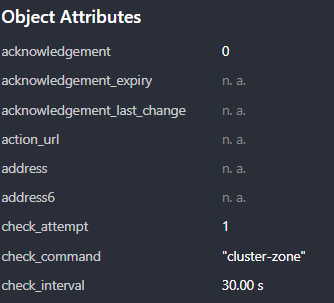

I found out that I have to create each agent as its own zone and use check_command = "cluster-zone", so that I can check whether the agent is connected to the master.

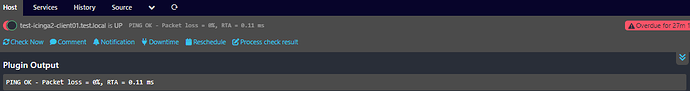

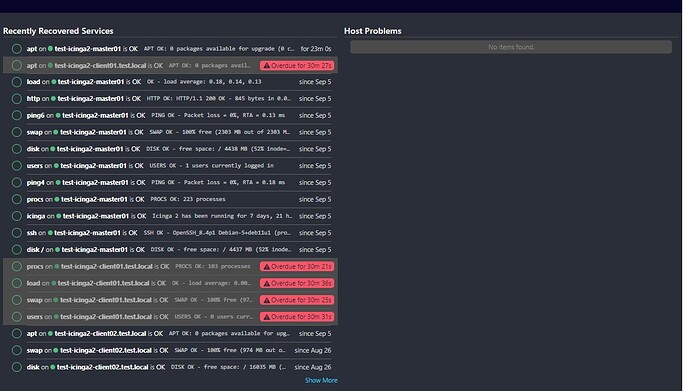

The only problem is that the checks become overdue when the client is offline, but the host is not flagged as down.

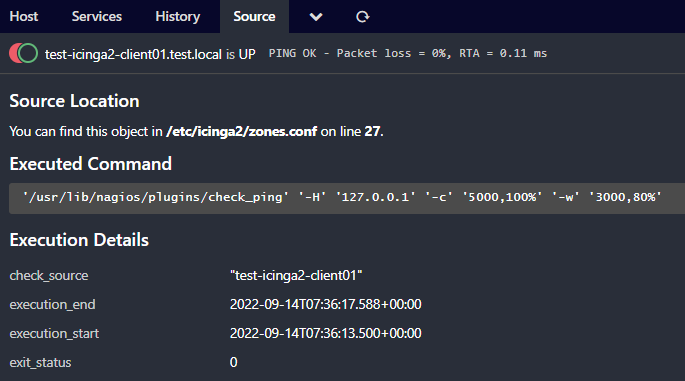

The host output doesn't look right either. As I understand it, the "cluster-zone" command isn't supposed to run a ping.

Only one of the two clients is shut down.

Logs:

cat /var/log/icinga2/icinga2.log

Client connecting:

[2022-09-14 11:20:15 +0200] information/ApiListener: New client connection for identity 'test-icinga2-client01' from [::ffff:192.168.xxx.xxx]:36862

[2022-09-14 11:20:15 +0200] information/ApiListener: Sending config updates for endpoint 'test-icinga2-client01' in zone 'test-icinga2-client01'.

[2022-09-14 11:20:15 +0200] information/ApiListener: Syncing configuration files for zone 'test-icinga2-client01' to endpoint 'test-icinga2-client01'.

[2022-09-14 11:20:15 +0200] information/JsonRpcConnection: Received certificate request for CN 'test-icinga2-client01' signed by our CA.

[2022-09-14 11:20:15 +0200] information/JsonRpcConnection: The certificate for CN 'test-icinga2-client01' is valid and uptodate. Skipping automated renewal.

[2022-09-14 11:20:15 +0200] information/ApiListener: Finished sending config file updates for endpoint 'test-icinga2-client01' in zone 'test-icinga2-client01'.

[2022-09-14 11:20:15 +0200] information/ApiListener: Syncing runtime objects to endpoint 'test-icinga2-client01'.

[2022-09-14 11:20:15 +0200] information/ApiListener: Finished syncing runtime objects to endpoint 'test-icinga2-client01'.

[2022-09-14 11:20:15 +0200] information/ApiListener: Finished sending runtime config updates for endpoint 'test-icinga2-client01' in zone 'test-icinga2-client01'.

[2022-09-14 11:20:15 +0200] information/ApiListener: Sending replay log for endpoint 'test-icinga2-client01' in zone 'test-icinga2-client01'.

[2022-09-14 11:20:15 +0200] information/ApiListener: Finished sending replay log for endpoint 'test-icinga2-client01' in zone 'test-icinga2-client01'.

[2022-09-14 11:20:15 +0200] information/ApiListener: Finished syncing endpoint 'test-icinga2-client01' in zone 'test-icinga2-client01'.

[2022-09-14 11:20:26 +0200] information/WorkQueue: #8 (ApiListener, SyncQueue) items: 0, rate: 0/s (0/min 0/5min 0/15min);

Client disconnecting:

[2022-09-14 11:22:11 +0200] warning/JsonRpcConnection: API client disconnected for identity 'test-icinga2-client01'

[2022-09-14 11:22:11 +0200] warning/ApiListener: Removing API client for endpoint 'test-icinga2-client01'. 0 API clients left.
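
In case it helps anyone reproduce this, here is a minimal sketch of how the connect and disconnect events for one agent can be pulled out of the log. The function name `agent_events` is made up for illustration, and the match strings are simply taken from the log lines above, so they may need adjusting for other Icinga 2 versions.

```shell
# Print connect/disconnect events for one endpoint from an Icinga 2 log.
# Usage: agent_events <endpoint-name> [logfile]
# (agent_events is a hypothetical helper, not part of Icinga 2.)
agent_events() {
    endpoint="$1"
    logfile="${2:-/var/log/icinga2/icinga2.log}"
    # First keep only lines mentioning the quoted endpoint name (fixed string),
    # then keep only the connection-related messages seen in the excerpts above.
    grep -F "'${endpoint}'" "$logfile" \
        | grep -E "New client connection|API client disconnected|Removing API client"
}
```

For example, `agent_events test-icinga2-client01` lists the three connection-related lines from the excerpts above and skips the config sync messages.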

Master configuration:

I have created one zone per host in zones.d, each with a separate services.conf file in which I can define my own checks for that host.

Directory tree in /etc/icinga2:

├── conf.d
│   └── [...]
├── constants.conf
├── features-available
│   └── [...]
├── features-enabled
│   ├── api.conf -> ../features-available/api.conf
│   ├── checker.conf -> ../features-available/checker.conf
│   ├── command.conf -> ../features-available/command.conf
│   ├── icingadb.conf -> ../features-available/icingadb.conf
│   ├── mainlog.conf -> ../features-available/mainlog.conf
│   └── notification.conf -> ../features-available/notification.conf
├── icinga2.conf
├── zones.conf
└── zones.d
    ├── test-icinga2-client01
    │   └── services.conf
    └── test-icinga2-client02
        └── services.conf

cat /etc/icinga2/zones.conf

I know this is not the place for templates.

template Host "generic-vars-host" {

check_command = "cluster-zone"

max_check_attempts = "3"

check_interval = 30s

retry_interval = 10s

}

object Endpoint "test-icinga2-master01" {

host = "192.168.xxx.xxx"

}

object Zone "master" {

endpoints = [ "test-icinga2-master01" ]

}

// Endpoints & Zones

object Endpoint "test-icinga2-client01" {

log_duration = 0s

}

object Zone "test-icinga2-client01" {

parent = "master"

endpoints = [ "test-icinga2-client01" ]

}

// Host Objects

object Host "test-icinga2-client01" {

import "generic-vars-host"

zone = "test-icinga2-client01"

display_name = "test-icinga2-client01.test.local"

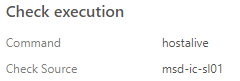

command_endpoint = "test-icinga2-client01"

}

// Endpoints & Zones

object Endpoint "test-icinga2-client02" {

log_duration = 0s

}

object Zone "test-icinga2-client02" {

parent = "master"

endpoints = [ "test-icinga2-client02" ]

}

// Host Objects

object Host "test-icinga2-client02" {

import "generic-vars-host"

zone = "test-icinga2-client02"

display_name = "test-icinga2-client02.test.local"

command_endpoint = "test-icinga2-client02"

}

object Zone "global-templates" {

global = true

}

cat /etc/icinga2/zones.d/test-icinga2-client01/services.conf

object Service "disk" {

import "generic-service"

host_name = "test-icinga2-client01"

check_command = "disk"

}

object Service "apt" {

import "generic-service"

host_name = "test-icinga2-client01"

check_command = "apt"

}

object Service "icinga" {

import "generic-service"

host_name = "test-icinga2-client01"

check_command = "icinga"

}

object Service "load" {

import "generic-service"

host_name = "test-icinga2-client01"

check_command = "load"

}

object Service "procs" {

import "generic-service"

host_name = "test-icinga2-client01"

check_command = "procs"

}

object Service "swap" {

import "generic-service"

host_name = "test-icinga2-client01"

check_command = "swap"

}

object Service "users" {

import "generic-service"

host_name = "test-icinga2-client01"

check_command = "users"

}

The same configuration applies to the second host (with a different host_name).

Client configuration:

To prevent a direct ICMP connection (for checks like hostalive), I ran the following command on the clients, where 192.168.xxx.xxx is the master's IP address:

iptables -A INPUT -s 192.168.xxx.xxx -p icmp --icmp-type echo-request -j DROP

cat /etc/icinga2/zones.conf

object Endpoint "test-icinga2-master01" {

host = "192.168.xxx.xxx"

port = "5665"

}

object Zone "master" {

endpoints = [ "test-icinga2-master01" ]

}

object Endpoint "test-icinga2-client01" {

}

object Zone "test-icinga2-client01" {

endpoints = [ "test-icinga2-client01" ]

parent = "master"

}

cat /etc/icinga2/features-enabled/api.conf

object ApiListener "api" {

accept_config = true

accept_commands = true

}

If you need more information, please let me know.

I would be very grateful to hear from you,

Jan

System-Information:

- Icinga 2 version: r2.13.5-1

- PHP version: PHP 8.1.9

- Platform: Debian GNU/Linux

- Platform version: 11 (bullseye)

- Kernel version: 5.10.0-17-amd64

- Architecture: x86_64